Exploring the Difference Between Data Analytics and Advanced Data Analytics by SIFT Analytics

Ever found yourself at a crossroads trying to decide between data analytics and advanced data analytics for your business? It can be a bit daunting, but let’s break it down together. By the end, you’ll know exactly what each entails and how to make the right choice for your needs.

Introduction to Data Analytics

Think of data analytics as the first step in understanding your data. It’s all about transforming your organization’s data to extract useful information. You collect data, clean it up, transform it into a usable format, and then visualize it to spot trends and insights.

Data Analytics in Action

Picture this: a retail store wants to understand its sales performance over the past year. They collect and clean their sales data, transforming it into an easy-to-analyze format. Then, they model this data to identify trends, like which products sold the most and which months were the busiest. With these insights, they can make smart decisions, like boosting inventory for popular products during peak months.
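The retail example can be sketched in a few lines of Python. This is a minimal illustration, not a production pipeline: the `sales` records, their field layout, and the helper `totals_by` are all hypothetical, and the data is assumed to be already collected and cleaned.

```python
from collections import defaultdict

# Hypothetical, already-cleaned sales records: (month, product, units_sold).
sales = [
    ("2023-11", "scarf", 40),
    ("2023-12", "scarf", 95),
    ("2023-12", "gloves", 70),
    ("2024-01", "gloves", 30),
    ("2024-01", "scarf", 25),
]

def totals_by(records, key_index):
    """Sum units sold, grouped by the field at key_index (0 = month, 1 = product)."""
    totals = defaultdict(int)
    for record in records:
        totals[record[key_index]] += record[2]
    return dict(totals)

units_by_month = totals_by(sales, 0)
units_by_product = totals_by(sales, 1)

# The trends the store cares about: busiest month and best-selling product.
busiest_month = max(units_by_month, key=units_by_month.get)
top_product = max(units_by_product, key=units_by_product.get)
print(busiest_month, top_product)
```

In practice the same grouping would usually be done in a BI tool or dashboard; the point is only that descriptive analytics boils down to cleaning, grouping, and summarizing historical data.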

Introduction to Advanced Data Analytics

Now, if you want to dive deeper, advanced data analytics is where things get really exciting. This involves more complex techniques and tools, like machine learning and AI, to gain even deeper insights and make more accurate predictions. Advanced analytics can even automate processes within your industry, supercharging your company’s capabilities.

Advanced Data Analytics in Action

Now, let’s say the same store wants to predict future sales and optimize its pricing strategy. They don’t just stop at sales data; they also collect customer demographics, competitor pricing, and marketing campaign data. Using machine learning algorithms, they build a predictive model that takes all these factors into account. This model can forecast future sales and suggest pricing strategies to maximize profits. The store can implement these strategies and monitor the results in real time, with the system continuously updating the model as new data comes in.

The Role of SIFT Analytics

Here’s where SIFT Analytics comes in. We help businesses tackle the complexities of data analytics by offering both data analytics and advanced data analytics solutions. SIFT enables companies to integrate various data sources, apply modern analytics techniques, and visualize the results through powerful dashboards. This makes the analytics process simpler and helps businesses act decisively on the insights they gain.

Wrapping It Up

So, in a nutshell, data analytics helps you understand past data and make informed decisions, while advanced data analytics uses sophisticated techniques such as ML and AI to get deeper insights, accurate predictions, automation, and more. By understanding the differences between these two types of analytics and seeing how they can be applied in real-world scenarios, you can better leverage your data to achieve your goals. Whether you’re looking to improve inventory management or optimize pricing strategies, the power of analytics is undeniable.

Connect with SIFT Analytics

As organisations strive to meet the demands of the digital era, SIFT remains steadfast in its commitment to delivering transformative solutions. To explore digital transformation possibilities or learn more about SIFT’s pioneering work, contact the team for a complimentary consultation. Visit the website at www.sift-ag.com for additional information.

About SIFT Analytics

Get a glimpse into the future of business with SIFT Analytics, where smarter data analytics driven by smarter software solutions is key. With our end-to-end solution framework backed by active intelligence, we strive towards providing clear, immediate and actionable insights for your organisation.

Headquartered in Singapore since 1999, with over 500 corporate clients in the region, SIFT Analytics is your trusted partner in delivering reliable enterprise solutions, paired with best-of-breed technology throughout your business analytics journey. Our experienced teams will journey together with you to integrate and govern your data, to predict future outcomes and optimise decisions, and to achieve the next generation of efficiency and innovation.

Published by SIFT Analytics

SIFT Marketing Team

marketing@sift-ag.com

+65 6295 0112

SIFT Analytics Group

Explore our latest insights

Social Service Agencies (SSAs) often face challenges related to resource limitations and operational issues. Discover how data analytics can transform the social service sector across eight key areas, from optimizing fundraising efforts to enhancing patient care and facility management.

SIFT Analytics Group has collaborated with various SSAs to implement data analytics in a way that doesn’t require users to have technical expertise. This approach enables organizations to gain better insights into their operations, helping them create a more responsive and effective social service system.

Donations

Understand donor behavior, preferences, and trends to optimize fundraising efforts.

By analyzing donor demographics, patterns, and communication channels, organizations can tailor their campaigns for maximum impact.

Example: Analytics can identify segments of donors who are more likely to respond to specific appeals, allowing organizations to personalize their outreach strategies and increase donation conversion rates.
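As a rough illustration of donor segmentation, the sketch below ranks segments by how often they respond to an appeal. The segment names and the `appeals` log are hypothetical; real donor data would come from a CRM or fundraising system.

```python
from collections import Counter

# Hypothetical appeal log: (donor_segment, responded_to_appeal).
appeals = [
    ("monthly_giver", True), ("monthly_giver", True), ("monthly_giver", False),
    ("event_attendee", True), ("event_attendee", False), ("event_attendee", False),
    ("lapsed", False), ("lapsed", False),
]

# Count appeals sent and responses received per segment.
sent = Counter(segment for segment, _ in appeals)
responded = Counter(segment for segment, ok in appeals if ok)

# Response rate per segment, then segments ranked from most to least responsive.
response_rate = {segment: responded[segment] / sent[segment] for segment in sent}
ranked = sorted(response_rate, key=response_rate.get, reverse=True)
print(ranked)
```

Segments at the top of the ranking are natural candidates for personalized outreach, while low-rate segments may need a different channel or message.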

Nursing Home

Analyze patient data, staffing levels, and facility usage patterns.

By leveraging data analytics tools, nursing homes can optimize staffing schedules, anticipate patient needs, and enhance overall quality of care.

Example: Analytics can identify trends in patient health outcomes and medication usage, enabling nursing homes to adjust their care plans accordingly and improve overall resident satisfaction and well-being.

Facilities

Track maintenance needs, resource utilization, and facility usage patterns.

By monitoring data such as equipment performance, energy consumption, and space utilization, organizations can identify opportunities for cost savings and efficiency improvements.

Example: Analytics can help facilities identify maintenance issues, optimize energy usage to reduce costs, and allocate space more efficiently to meet high demand and maximize utilization rates.

Rehab

Analyze patient progress, treatment effectiveness, and therapy outcomes.

By tracking patient data such as rehabilitation exercises, mobility levels, and recovery milestones, rehab centers can personalize treatment plans and monitor progress more effectively.

Example: Analytics can help identify correlations between specific therapy interventions and patient outcomes, enabling rehab centers to tailor treatment protocols for individual patients and optimize rehabilitation strategies for better recovery results.

Finance

Gain insights into financial performance, budget allocation, and cost optimization.

By analyzing financial data such as revenue streams, expenses, and cash flow patterns, organizations can identify areas for improvement and make informed decisions.

Example: Analytics can highlight areas of overspending or inefficiency, enabling organizations to reallocate resources effectively and streamline financial processes for better fiscal management.

Operations

Identify bottlenecks, improve resource utilization, and enhance overall efficiency.

By analyzing operational data such as workflow patterns, resource allocation, and performance metrics, organizations can identify areas for process optimization and implement targeted improvements.

Example: Analytics can help identify inefficiencies in workflow processes, enabling organizations to streamline operations, reduce costs, and improve service delivery.

Daycare

Optimize enrollment, scheduling, and staff allocation.

By analyzing attendance patterns, caregiver-to-child ratios, and parent feedback, daycares can improve operational efficiency and provide better care for children.

Example: Analytics can help daycare centers forecast demand for childcare services, allocate staff resources more effectively, and optimize scheduling to accommodate fluctuating enrollment levels and maintain high-quality care standards.

Social Media

Measure the effectiveness of social media efforts and engage with target audiences more strategically.

By analyzing social media metrics such as engagement rates, audience demographics, and content performance, organizations can refine their social media strategies to drive meaningful interactions and achieve their objectives.

Example: Analytics can identify the types of content that resonate most with followers, helping organizations create more engaging posts and increase their social media presence.

#AskSIFT

Learn more with SIFT Analytics.

Whether you have questions, need advice, or are looking for solutions to specific challenges in your organization, we’re ready to listen and offer guidance tailored to your needs.


Imagine what happens when someone who is passionate about their work and your company frequently runs into issues with their hardware or software. It leads to a bad experience and they eventually lose interest in their work and your organization. This results in poor employee experience that leads to higher attrition rates and occasionally a poor customer experience.

The success of an organization depends not just on the product or service it sells but also on the people who are part of it. Processes that stand in their way or slow them down hurt the employee experience.


Here are six trends from 2024 that are likely to shape the legal landscape of data in the years ahead.

1. Advancing Adoption of AI

The previous year witnessed the widespread embrace of generative artificial intelligence (AI), presenting governments and organizations with intricate dilemmas on adapting to AI’s challenges and opportunities. Many enterprises possess extensive data repositories, prompting the exploration of additional value and efficiencies through AI.

2. Increasing Complexity and Global Alignment of Privacy Regulations

The year 2023 marked the continual expansion of privacy regulations globally, with an increasing number of countries adopting comprehensive privacy laws. While each jurisdiction maintains its unique approach to privacy regulation, common elements are emerging across these laws.

3. Businesses Respond to a Shifting Cyber Risk Landscape

Businesses are increasingly aware of two significant risks: ransomware and insider threats. To tackle these emerging challenges, companies will need actionable insights that significantly improve their ability to respond to future incidents.

4. Strengthening of Data Portability Rights

There is growing empowerment for companies to seamlessly move and transfer their data between platforms, fostering increased control and flexibility over their digital information. This evolution reflects a heightened emphasis on user-centric data management, promoting privacy and data autonomy in the digital landscape.

5. Rising Threat of Data Litigation

High-profile and extensive data breaches have always posed the risk of expensive and reputationally damaging mass litigation, and such claims persist. Recent breaches, together with evolving case law and legislation, indicate that data litigation should remain a primary concern for many organizations.

6. Businesses Globally Prioritize Acquiring Valuable Datasets

Persistent issues related to data, such as data ownership and data protection, will continue to be crucial in mergers and acquisitions (M&A). Moreover, new challenges have arisen, such as when buyers seek to acquire artificial intelligence (AI) related assets, or when developments in international data transfers require consideration.


Companies insisting on sticking to outdated procurement methods, which lack data-driven insights and visibility in their supply chain, have a higher risk of overspending and operating an ineffective supply chain.

Discover how the Alteryx Procurement Analytics solution can assist your organization in enhancing procurement process visibility, reducing spending, and mitigating risks in the supply chain.

Measuring Procurement Contribution

Assessing the impact of procurement on the bottom line and overall profitability is a challenge.

Cost of Goods Sold Control

Controlling raw material costs is crucial, involving factors such as timing, product quality, and supplier relationships.

Tool Limitations

Procurement managers face limitations with tools like spreadsheets and spend-analysis software, struggling to extract valuable insights from relevant data sources.

Expanded Analytical Scope

Enable procurement managers to move beyond traditional spend analysis, encompassing areas like cost modeling, supplier risk/performance, and market intelligence.

Comprehensive Data Integration

By collecting data from diverse sources, procurement managers create a holistic view of material procurement, aiding in cost control and supply chain risk mitigation.

Long-Term Value

Identify cost-saving opportunities, open new markets, and optimize supply chain processes through predictive analytics tools.

Efficiency Gains

Assess vendor quality and maximize your spend.

Customer Experience

Ensure the end product meets demand and serves customers’ needs.

Risk Reduction

Make confident procurement decisions to minimize costs while meeting demand in a constantly disrupted supply chain.


Data Explosion

Digital platforms and social media have led to exponential data growth, offering vast opportunities for data-driven decision-making.

Advanced Techniques

Predictive analytics, prescriptive analytics, and machine learning have revolutionized Data Analytics, providing deeper insights and optimized processes.

AI Integration

Artificial Intelligence and machine learning enhance automated data analysis and real-time decision-making, boosting efficiency.

Real-Time Analytics

Businesses are adopting real-time solutions for prompt decision-making, addressing critical events as they unfold.

Data Privacy and Ethics

Compliance with regulations like GDPR is vital. Ethical considerations, transparency, and bias are essential factors in data usage.

Data Quality

Ensuring accurate and integrated data from diverse sources remains a challenge, necessitating robust governance strategies.

Skill Gap

Demand for skilled data analysts outstrips supply, requiring expertise in statistics, programming, machine learning, and domain knowledge.

Process Optimization

Data Analytics streamlines workflows, identifies bottlenecks, and boosts productivity, enhancing operational efficiency.

Customer Insights

Understanding customer behavior enables targeted marketing, personalized experiences, and improved satisfaction.

Key Players

Major industry players like AWS and IBM offer comprehensive solutions. Firms such as SIFT provide consulting and offer end-to-end data analytics tools.

Industry Forecast

According to the Business Leaders review, the global Data Analytics market is set to reach $132.9 billion by 2026, driven by AI adoption, IoT, and real-time analytics, growing at a CAGR of 28.9%.
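For readers who want to see what a 28.9% CAGR means in practice, the sketch below compounds the forecast figure backward from 2026. The source does not state the forecast's base year or starting value, so the earlier-year figures are implied values under the assumption that the rate holds every year, not reported market sizes. The helper `project` is a hypothetical name.

```python
def project(value, rate, years):
    """Compound value forward (years > 0) or backward (years < 0) at a fixed annual rate."""
    return value * (1 + rate) ** years

target_2026 = 132.9  # USD billions, from the forecast above
cagr = 0.289         # 28.9% per year, from the forecast above

# Implied market size n years before 2026, assuming the rate held every year.
implied = {2026 - n: round(project(target_2026, cagr, -n), 1) for n in range(4)}

for year in sorted(implied):
    print(year, implied[year])
```

Each year's implied value is the following year's value divided by 1.289, which is all a compound annual growth rate expresses.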

Impact of Developments

Privacy Regulations — Evolving laws influence data practices, emphasizing compliance and responsible data usage.

AI Advancements — AI integration enhances data processing and predictive capabilities, fostering innovation.

Ethical Focus — Heightened awareness may lead to frameworks addressing biases and ensuring transparency in Data Analytics practices.

In a nutshell, Data Analytics experiences unprecedented growth due to advanced techniques and AI integration. Challenges in data quality and skills are met with opportunities in process optimization and enriched customer insights. Future advancements will be guided by evolving regulations and ethical considerations, steering the industry toward innovation and responsible practices.


Philadelphia – Recent research by Qlik shows that enterprises are planning significant investments in technologies that enhance data fabrics to enable generative AI success, and are looking to a hybrid approach that incorporates generative AI with traditional AI to scale its impact across their organizations.

The “Generative AI Benchmark Report”, executed in August 2023 by Enterprise Technology Research (ETR) on behalf of Qlik, surveyed 200 C-Level executives, VPs, and Directors from Global 2000 firms across multiple industries. The survey explores how leaders are leveraging the generative AI tools they purchased, lessons learned, and where they are focusing to maximize their generative AI investments.

“Generative AI’s potential has ignited a wave of investment and interest both in discrete generative AI tools, and in technologies that help organizations manage risk, embrace complexity and scale generative AI and traditional AI for impact,” said James Fisher, Chief Strategy Officer at Qlik. “Our Generative AI Benchmark report clearly shows leading organizations understand that these tools must be supported by a trusted data foundation. That data foundation fuels the insights and advanced use cases where the power of generative AI and traditional AI together come to life.”

The report found that while the initial excitement of what generative AI can deliver remains, leaders understand they need to surround these tools with the right data strategies and technologies to fully realize their transformative potential. And while many are forging ahead with generative AI to alleviate competitive pressures and gain efficiencies, they are also looking for guidance on where to start and how to move forward quickly while keeping an eye on risk and governance issues.

Creating Value from Generative AI

Even with the market focus on generative AI, respondents noted they clearly see traditional AI still bringing ongoing value in areas like predictive analytics. Where they expect generative AI to help is in extending the power of AI beyond data scientists or engineers, opening up AI capabilities to a larger population. Leaders expect this approach will help them scale the ability to unlock deeper insights and find new, creative ways to solve problems much faster.

This vision of what’s possible with generative AI has driven an incredible level of investment. 79% of respondents have either purchased generative AI tools or invested in generative AI projects, and 31% say they plan to spend over $10 million on generative AI initiatives in the coming year. However, those investments run the risk of being siloed, since 44% of these organizations noted they lack a clear generative AI strategy.

Surrounding Generative AI with the Right Strategy and Support

When asked how they intend to approach generative AI, 68% said they plan to leverage public or open-source models refined with proprietary data, and 45% are considering building models from scratch with proprietary data.

Expertise in these areas is crucial to avoiding the widely reported data security, governance, bias and hallucination issues that can occur with generative AI. Respondents understand they need help, with 60% stating they plan to rely partially or fully on third-party expertise to close this gap.

Many organizations are also looking to data fabrics as a core part of their strategy to mitigate these issues. Respondents acknowledged their data fabrics either need upgrades or aren’t ready when it comes to generative AI. In fact, only 20% believe their data fabric is very/extremely well equipped to meet their needs for generative AI.

Given this, it’s no surprise that 73% expect to increase spend on technologies that support data fabrics. Part of that spend will need to focus on managing data volumes, since almost three quarters of respondents said they expect generative AI to increase the amount of data moved or managed on current analytics. The majority of respondents also noted that data quality, ML/AI tools, data governance, data integration and BI/Analytics all are important or very important areas to delivering a data fabric that enables generative AI success. Investments in these areas will help organizations remove some of the most common barriers to implementation per respondents, including regulation, data security and resources.

The Path to Generative AI Success – It’s All About the Data

While every organization’s AI strategy can and should be different, one fact remains the same: the best AI outcomes start with the best data. With the massive amount of data that needs to be curated, quality-assured, secured, and governed to support AI and construct useful generative AI models, a modern data fabric is essential. And once data is in place, the platform should deliver end-to-end, AI-enabled capabilities that help all users – regardless of skill level – get powerful insights with automation and assistance. Qlik enables customers to leverage AI in three critical ways:

To learn more about how Qlik is helping organizations drive success with AI, visit Qlik Staige.


Connect data sources, apps, business logic, analytics and people

Train your models in a trusted, controlled environment

Reduce guesswork with visualizations and predictive insights

Chat-based summary generation

What should our staffing levels be next year?

┗ What do we need to do now to meet those needs?

What do we expect our revenue to be in Q3 for each region?

┗ What actions can we take to maximize revenue?

Which inventory outages might occur next year?

┗ How can we ensure we have the right products on hand?

Which customers should we target going forward?

┗ Which products should we offer them?

Which capital investments should we make in Q2?

┗ Which characteristics of an asset drive the highest ROI?

What do we project our expenses to be next quarter by category?

┗ What are the key factors driving expenses?

Which high-value employees are at an increased risk of leaving?

┗ What are the specific factors driving that decision?

How should we plan our capacity next year to best meet demand?

┗ How can we optimise our processes to reduce bottlenecks?

Which opportunities are likely to close this quarter?

┗ How do we create a higher close rate?

Prepare quality governed data for generative AI you can trust.

Bring AI to BI for analytics that stay one step ahead.

Build and deploy AI for advanced use cases.


Introducing Alteryx AiDIN

Generative AI meets trusted analytics, so you can go from insights to impact even faster.

Now you can have the best of both worlds: Alteryx AiDIN combines the best of AI, machine learning, and generative AI with an end-to-end, unified, enterprise-grade analytics cloud platform.

Why Alteryx AiDIN?

Quickly generate text-based content, predictions, recommendations, and scenarios that can inform critical business decisions.

Innovate faster by discovering new patterns in data that were previously undiscoverable.

Automate repetitive content creation tasks and reduce manual effort.

Ensure that data and analytics processes are transparent, auditable, and compliant with regulatory requirements.

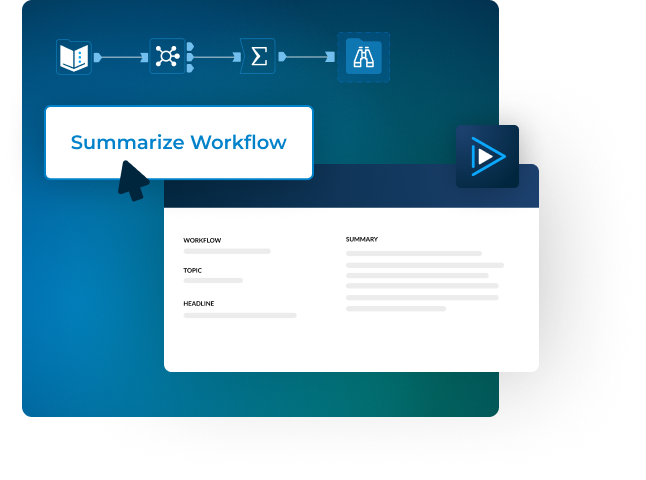

Take a look at two of our newest features.

Make documenting workflows a breeze with this new feature that uses OpenAI’s GPT API. Let the tool create concise summaries of a workflow’s purpose, inputs, outputs, and key logic steps for you.

Once you’ve created your analytics insights, select your preferred form of communication (email, PowerPoint, etc.) and choose your audience. Then watch as our generative AI automatically drafts a customized summary of your work.

Three decades into the data revolution, we find ourselves asking an existential question: What happened to the promise of customer 360?

The ability to get more customer data was supposed to fundamentally change the relationship between customers and brands. Companies were going to be able to offer targeted, meaningful engagements that would multiply average deal size and slash time to close. Predictive algorithms would make it possible for brands to give their customers everything they needed — before they knew they needed it — sending customer loyalty and lifetime value (LTV) through the roof.

So what went wrong?

When digital transformation became a common objective, most organizations treated it as nothing more than a perfunctory series of boxes to check if they wanted to keep up with the competition. Even as companies invested in expensive tools to generate and consume data, they still struggled to see the promised value in those investments. In fact, a shocking 77% of organizations report that customer insights have failed to become a source of growth and competitive differentiation.

Realizing the promise of customer 360 requires a change in the way we think about customer data. The companies that thrive will be the ones that adopt a holistic perspective and treat data as a long-term strategic asset that underpins every business decision. In short, healthy businesses will be the ones that prioritize data health.

Published by SIFT Analytics
