Exploring the Difference Between Data Analytics and Advanced Data Analytics by SIFT Analytics

Ever found yourself at a crossroads trying to decide between data analytics and advanced data analytics for your business? It can be a bit daunting, but let’s break it down together. By the end, you’ll know exactly what each entails and how to make the right choice for your needs.

Introduction to Data Analytics

Think of data analytics as the first step in understanding your data. It’s all about transforming your organization’s data to extract useful information. You collect data, clean it up, transform it into a usable format, and then visualize it to spot trends and insights.

Data Analytics in Action

Picture this: a retail store wants to understand its sales performance over the past year. They collect and clean their sales data, transforming it into an easy-to-analyze format. Then, they model this data to identify trends, like which products sold the most and which months were the busiest. With these insights, they can make smart decisions, like boosting inventory for popular products during peak months.
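To make the retail example concrete, here is a minimal sketch in plain Python. The records, product names, and the `totals_by` helper are all made up for illustration; a real store would pull this from its own sales system.

```python
from collections import defaultdict

# Hypothetical sales records: (month, product, units_sold). Illustrative values only.
sales = [
    ("2023-11", "kettle", 40),
    ("2023-11", "toaster", 25),
    ("2023-12", "kettle", 90),
    ("2023-12", "toaster", 60),
    ("2024-01", "kettle", 30),
]

def totals_by(records, key_index):
    """Sum units sold, grouped by the field at key_index (0 = month, 1 = product)."""
    totals = defaultdict(int)
    for record in records:
        totals[record[key_index]] += record[2]
    return dict(totals)

units_by_product = totals_by(sales, 1)
units_by_month = totals_by(sales, 0)

# The best seller and the busiest month are simply the largest totals.
best_product = max(units_by_product, key=units_by_product.get)
busiest_month = max(units_by_month, key=units_by_month.get)
print(best_product, busiest_month)
```

With totals like these in hand, the store can see at a glance where to add inventory and when.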

Introduction to Advanced Data Analytics

Now, if you want to dive deeper, advanced data analytics is where things get really exciting. This involves more complex techniques and tools, like machine learning and AI, to gain even deeper insights and make more accurate predictions. Advanced analytics can even automate processes within your industry, supercharging your company’s capabilities.

Advanced Data Analytics in Action

Now, let’s say the same store wants to predict future sales and optimize their pricing strategy. They don’t just stop at sales data; they also collect customer demographics, competitor pricing, and marketing campaign data. Using machine learning algorithms, they build a predictive model that takes all these factors into account. This model can forecast future sales and suggest pricing strategies to maximize profits. The store can implement these strategies and monitor the results in real-time, with the system continuously updating the model as new data comes in.
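As a rough sketch of the idea, the snippet below fits a straight line to past prices and units sold, then picks the candidate price with the highest predicted profit. It is a deliberate simplification: ordinary least squares with a single input stands in for the machine learning model, and the price history, unit cost, and candidate prices are all assumed values, not data from a real store.

```python
def fit_line(xs, ys):
    """Ordinary least squares for y = intercept + slope * x."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return intercept, slope

# Hypothetical history: price charged and units sold at that price.
prices = [8.0, 9.0, 10.0, 11.0, 12.0]
units = [120, 110, 100, 90, 80]
unit_cost = 4.0  # assumed cost per unit

intercept, slope = fit_line(prices, units)

def predicted_units(price):
    """Forecast demand at a given price, never below zero."""
    return max(0.0, intercept + slope * price)

def predicted_profit(price):
    return (price - unit_cost) * predicted_units(price)

# Evaluate a handful of candidate prices and keep the most profitable one.
candidates = [8.0, 9.0, 10.0, 11.0, 12.0, 13.0]
best_price = max(candidates, key=predicted_profit)
print(best_price, predicted_profit(best_price))
```

A production model would add the other factors mentioned above, such as demographics, competitor pricing, and campaign data, and would be refit as new data arrives.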

The Role of SIFT Analytics

Here’s where SIFT Analytics comes in. We help businesses tackle the complexities of data analytics by offering both data analytics and advanced data analytics solutions. SIFT enables companies to integrate various data sources, apply modern analytics techniques, and visualize the results through powerful dashboards. This makes the analytics process simpler and helps businesses act decisively on the better insights they gain.

Wrapping It Up

So, in a nutshell, data analytics helps you understand past data and make informed decisions, while advanced data analytics uses sophisticated techniques such as ML and AI to get deeper insights, accurate predictions, automation, and more. By understanding the differences between these two types of analytics and seeing how they can be applied in real-world scenarios, you can better leverage your data to achieve your goals. Whether you’re looking to improve inventory management or optimize pricing strategies, the power of analytics is undeniable.

Connect with SIFT Analytics

As organisations strive to meet the demands of the digital era, SIFT remains steadfast in its commitment to delivering transformative solutions. To explore digital transformation possibilities or learn more about SIFT’s pioneering work, contact the team for a complimentary consultation. Visit the website at www.sift-ag.com for additional information.

About SIFT Analytics

Get a glimpse into the future of business with SIFT Analytics, where smarter data analytics driven by smarter software solutions is key. With our end-to-end solution framework backed by active intelligence, we strive towards providing clear, immediate and actionable insights for your organisation.

Headquartered in Singapore since 1999, with over 500 corporate clients in the region, SIFT Analytics is your trusted partner in delivering reliable enterprise solutions, paired with best-of-breed technology throughout your business analytics journey. Together with our experienced teams, we will journey with you to integrate and govern your data, to predict future outcomes and optimise decisions, and to achieve the next generation of efficiency and innovation.

Published by SIFT Analytics

SIFT Marketing Team

marketing@sift-ag.com

+65 6295 0112

SIFT Analytics Group


Social Service Agencies (SSAs) often face challenges related to resource limitations and operational issues. Discover how data analytics can transform the social service sector across eight key areas, from optimizing fundraising efforts to enhancing patient care and facility management.

SIFT Analytics Group has collaborated with various SSAs to implement data analytics in a way that doesn’t require users to have technical expertise. This approach enables organizations to gain better insights into their operations, helping them create a more responsive and effective social service system.

Donations

Understand donor behavior, preferences, and trends to optimize fundraising efforts.

By analyzing donor demographics, patterns, and communication channels, organizations can tailor their campaigns for maximum impact.

Example: Analytics can identify segments of donors who are more likely to respond to specific appeals, allowing organizations to personalize their outreach strategies and increase donation conversion rates.
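Here is a small sketch of what that segment analysis could look like. The appeal log, segment labels, and channel names are invented for illustration; the point is only that response rates per segment and channel fall out of a simple count.

```python
from collections import defaultdict

# Hypothetical appeal log: (donor_segment, appeal_channel, donated)
appeals = [
    ("under_40", "email", True),
    ("under_40", "email", False),
    ("under_40", "mail", False),
    ("under_40", "mail", False),
    ("over_40", "email", False),
    ("over_40", "email", False),
    ("over_40", "mail", True),
    ("over_40", "mail", True),
]

def response_rates(log):
    """Share of appeals that led to a donation, per (segment, channel) pair."""
    sent = defaultdict(int)
    donated = defaultdict(int)
    for segment, channel, gave in log:
        sent[(segment, channel)] += 1
        donated[(segment, channel)] += int(gave)
    return {key: donated[key] / sent[key] for key in sent}

rates = response_rates(appeals)

def best_channel(segment):
    """Channel with the highest observed response rate for one segment."""
    options = {ch: rate for (seg, ch), rate in rates.items() if seg == segment}
    return max(options, key=options.get)

print(best_channel("under_40"), best_channel("over_40"))
```

With real data, the sample sizes per segment matter: a rate based on a handful of appeals is a hint, not a conclusion.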

Nursing Home

Analyze patient data, staffing levels, and facility usage patterns.

By leveraging data analytics tools, nursing homes can optimize staffing schedules, anticipate patient needs, and enhance overall quality of care.

Example: Analytics can identify trends in patient health outcomes and medication usage, enabling nursing homes to adjust their care plans accordingly and improve overall resident satisfaction and well-being.

Facilities

Track maintenance needs, resource utilization, and facility usage patterns.

By monitoring data such as equipment performance, energy consumption, and space utilization, organizations can identify opportunities for cost savings and efficiency improvements.

Example: Analytics can help facilities identify maintenance issues, optimize energy usage to reduce costs, and allocate space more efficiently to meet high demand and maximize utilization rates.

Rehab

Analyze patient progress, treatment effectiveness, and therapy outcomes.

By tracking patient data such as rehabilitation exercises, mobility levels, and recovery milestones, rehab centers can personalize treatment plans and monitor progress more effectively.

Example: Analytics can help identify correlations between specific therapy interventions and patient outcomes, enabling rehab centers to tailor treatment protocols for individual patients and optimize rehabilitation strategies for better recovery results.

Finance

Gain insights into financial performance, budget allocation, and cost optimization.

By analyzing financial data such as revenue streams, expenses, and cash flow patterns, organizations can identify areas for improvement and make informed decisions.

Example: Analytics can highlight areas of overspending or inefficiency, enabling organizations to reallocate resources effectively and streamline financial processes for better fiscal management.

Operations

Identify bottlenecks, improve resource utilization, and enhance overall efficiency.

By analyzing operational data such as workflow patterns, resource allocation, and performance metrics, organizations can identify areas for process optimization and implement targeted improvements.

Example: Analytics can help identify inefficiencies in workflow processes, enabling organizations to streamline operations, reduce costs, and improve service delivery.

Daycare

Optimize enrollment, scheduling, and staff allocation.

By analyzing attendance patterns, caregiver-to-child ratios, and parent feedback, daycares can improve operational efficiency and provide better care for children.

Example: Analytics can help daycare centers forecast demand for childcare services, allocate staff resources more effectively, and optimize scheduling to accommodate fluctuating enrollment levels and maintain high-quality care standards.
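A minimal sketch of that forecast, assuming a simple moving average is good enough as a first estimate. The attendance figures and the caregiver-to-child ratio are placeholders; actual ratios depend on local regulations and the age group.

```python
import math

def moving_average_forecast(history, window=3):
    """Forecast the next period as the mean of the most recent `window` values."""
    recent = history[-window:]
    return sum(recent) / len(recent)

def staff_needed(children, max_children_per_caregiver):
    """Round up so the ratio is never exceeded."""
    return math.ceil(children / max_children_per_caregiver)

# Hypothetical average daily attendance for the last five weeks.
weekly_attendance = [30, 34, 32, 36, 40]

expected_children = moving_average_forecast(weekly_attendance)
caregivers = staff_needed(expected_children, max_children_per_caregiver=5)
print(expected_children, caregivers)
```

A moving average lags behind sudden changes, so a centre with strong seasonal swings would likely want a method that accounts for them.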

Social Media

Measure the effectiveness of social media efforts and engage with the target audience more strategically.

By analyzing social media metrics such as engagement rates, audience demographics, and content performance, organizations can refine their social media strategies to drive meaningful interactions and achieve their objectives.

Example: Analytics can identify the types of content that resonate most with followers, helping organizations create more engaging posts and increase their social media presence.

#AskSIFT

Learn more with SIFT Analytics.

Whether you have questions, need advice, or are looking for solutions to specific challenges in your organization, we’re ready to listen and offer guidance tailored to your needs.


Imagine how quickly your business could grow if you received in-depth data on customer needs every day. Did you know that marketers at leading organizations get this data daily with almost no manual effort? They use automated data analysis, or Analytics Automation, which delivers reports on marketing trends and insight forecasts automatically, helping them grow their business by leaps and bounds.

While marketing leaders want fast insights to make more accurate business decisions, many still face budget and staffing constraints that leave them without enough time and resources to find insights as quickly and precisely as they need. Drawing on Alteryx’s recommendations, here are 4 ways to increase your business value with Analytics Automation, so you can become a leading marketer and move your business well ahead of competitors.

To prepare marketing teams for the approaching cookieless world, tracking customers, competitors, and campaigns requires bringing all the data together to get a complete picture of the customer. With Analytics Automation, you can quickly access and ingest data from every source, whether cloud data, on-premises data, first-party data, or insights from marketing applications (web analytics, SEO tools, CRM, and others), to get a 360-degree view of the customer quickly. A marketing team with these insights can respond to market changes in real time, which is far better than keeping data in a single source.

Take storing data in a spreadsheet, which most of us are familiar with. Many people may not know that most spreadsheets have a limit of about one million rows. That may sound like a lot, but once the limit is reached, the spreadsheet cannot take any more data. Analytics Automation solutions, by contrast, have no such row limit, meaning you can process all your customer, product, or POS (point of sale) data in one place.
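To illustrate why a row limit need not apply outside a spreadsheet, here is a small Python sketch that totals sales per store while reading one row at a time, so the full file never has to fit on a worksheet or in memory. The column names `store` and `amount` and the sample data are assumptions for the example, and this is not how any particular Analytics Automation product works internally.

```python
import csv
import io
from collections import defaultdict

def revenue_by_store(rows):
    """Accumulate revenue per store while consuming rows one at a time."""
    totals = defaultdict(float)
    for row in rows:
        totals[row["store"]] += float(row["amount"])
    return dict(totals)

# A small in-memory stand-in for a large POS export. A real file would be
# opened with open(path, newline="") and passed to csv.DictReader the same way.
sample = io.StringIO("store,amount\nA,10.5\nB,4.0\nA,2.5\n")
totals = revenue_by_store(csv.DictReader(sample))
print(totals)
```

Because only the running totals are kept, the same loop handles a thousand rows or a hundred million.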

According to Gartner, although data analytics plays a fairly large role today, it actually influences only 53% of marketing decisions. Most marketing teams still need help translating data into business value, often because they lack enough people or time, which drags down the team’s performance. Analytics Automation, an easy-to-use automated data analysis solution, can help your marketing team do more in less time.

These solutions not only save hundreds of hours by automating tedious data cleaning and preparation, but also let your team discover insights on their own. That means you no longer need to rely on a data team or data analyst and wait for dashboards, because relying on dashboards alone can mean delays, or sometimes dashboards that do not work, leaving you without the insights you need. Automating time-consuming analysis also gives your marketers and analysts more time for strategic work.

Success in marketing requires beating competitors through the ability to anticipate market conditions while keeping up with competitors’ moves. That means you must be quick to keep pace in every situation. But without accurate insights, your marketing team cannot do this, whether it is optimizing targeting, pricing, or strategy. With Analytics Automation, you can quickly find the right, well-targeted offer for each customer, the one that will do the most to lift conversion rate and revenue.

In addition, if you have data-savvy marketers on your team, you can use AutoML features to build machine learning models quickly and easily to optimize budget allocation, analyze sales opportunities, predict (and prevent) customer churn, and set pricing that fits your goals.

The goal of marketing is to deliver short-term ROI while building long-term strategic value, which often puts heavy pressure on marketing teams. Adopting Analytics Automation can help you create business value right away, because these systems are easy to use and quick to deploy, saving you cost and time on training. And if the Analytics Automation system you use has a community where users can exchange knowledge, you can also learn from other users.

Choosing the right technology not only helps you deliver ROI and business value quickly, but also supports what is coming in the future, whether that is syncing data to your cloud or being ready for AI.

From the 4 points above, we can see that adopting Analytics Automation is a key to doing more in less time and accelerating business value. If you are looking for a capable Analytics Automation solution with broad coverage, SIFT Analytics Group recommends the Alteryx Analytic Process Automation (APA) Platform, which addresses these needs with a range of standout features, such as:

Alteryx – Intuitive Drag and Drop UI

Easy to use with drag and drop

The Alteryx Analytic Process Automation (APA) Platform is a key tool that lets everyone in the organization access a wide range of data sources and ingest, clean, and prepare data for analysis easily through a drag-and-drop interface. It lets users analyze data with machine learning models, build reports, or send ready-to-use data to various visualization tools on their own.

Connect with SIFT Analytics

As organisations work to keep pace with every digital change, SIFT remains committed to delivering solutions that bring business transformation to our customers. To learn more and begin your digital transformation with SIFT, contact our team for a free consultation, or visit our website at www.sift-ag.com for more information.

About SIFT Analytics

Step into the future of business more intelligently with SIFT Analytics, a data analytics provider driven by end-to-end software solutions and built on modern active intelligence. We are committed to delivering clear, immediately actionable insights for your organisation.

Headquartered in Singapore since 1999, with over 500 corporate clients in the region, SIFT Analytics is your trusted partner in delivering reliable enterprise solutions, paired with best-of-breed technology throughout your business analytics journey. Together with our experienced teams, we will journey with you to integrate and govern your data, to predict future outcomes and optimise decisions, and to achieve the next generation of efficiency and innovation.

Published by SIFT Analytics – SIFT Thailand Marketing Team

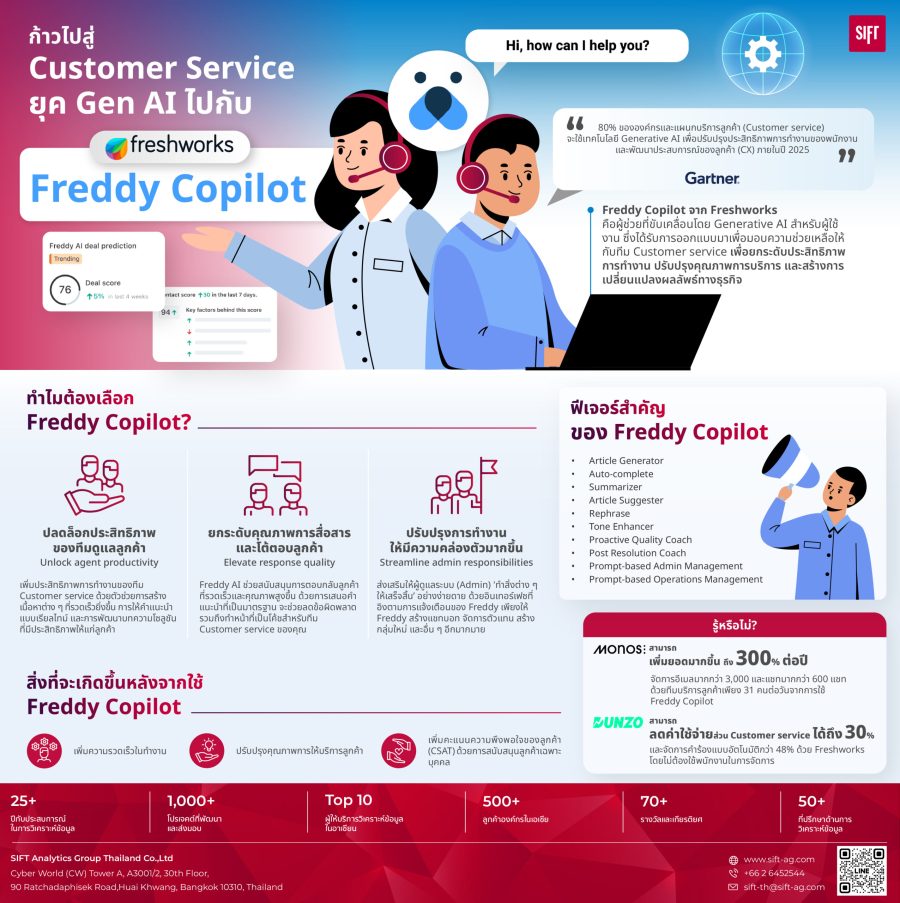

“80% of organizations and customer service departments will use Generative AI technology to improve employee productivity and enhance customer experience (CX) by 2025” – Gartner, Inc

With Generative AI’s momentum continuing into 2024, it is clear that across industries, and in nearly every department of many organizations, intelligent assistants such as Generative AI are being brought in to make work faster and more automated while also reducing costs, leading to better results and a better customer experience.

And when it comes to work in…

If your organization is also looking to step into a new era of customer service, SIFT Analytics Group recommends Freddy Copilot from Freshworks, a Generative AI-powered assistant designed to help customer service teams raise productivity, improve service quality, and transform business outcomes.

1. Unlock agent productivity

Boost your customer service team’s productivity with faster content generation, real-time suggestions, and more effective solution articles for customers.

Key features

Summarizer

2. Elevate response quality

Freddy AI supports faster, higher-quality responses to customers. By offering standardized suggestions, it helps reduce errors and acts as a coach for your customer service team.

Key features

Post Resolution Quality Coach

3. Streamline admin responsibilities

Empower admins to ‘get things done’ with ease through Freddy’s prompt-based interface. Just ask Freddy to build a chatbot, manage agents, create new groups, and much more.

Key features

Source: Freddy Copilot powered by GenAI

If you would like to learn more about Freddy Copilot and Freshworks, contact the SIFT Analytics Group team for a consultation today, and step into the Gen AI era of customer service to lift your customer service above the competition.

Content source: Freddy Copilot powered by GenAI


Imagine what happens when someone who is passionate about their work and your company frequently runs into issues with their hardware or software. It leads to a bad experience, and they eventually lose interest in their work and your organization. This results in a poor employee experience that leads to higher attrition rates and, occasionally, a poor customer experience.

The success of an organization depends not just on the product or service it sells but also on the people who are part of it. Processes that stand in their way or slow them down hurt the employee experience.


There is no denying that in an era when every organization is undergoing digital transformation, generative artificial intelligence, or Generative AI, has been widely adopted for its ability to intelligently create all kinds of content, including images, video, audio, text, 3D models, and more. It has become an assistant that greatly reduces the time work takes today.

This is why Alteryx launched Alteryx AiDIN, an AI engine that combines the capabilities of Generative AI and machine learning (ML) on the Alteryx Analytics Platform to increase efficiency and business value for you in many areas, such as:

Alteryx AiDIN works with the Alteryx platform across a wide range of capabilities, including:

WHITE PAPER The Alteryx Approach to Generative AI For Analytics,4

As Generative AI’s capabilities advance every day, the Alteryx platform has developed new applications to support AI innovation and to align its capabilities with users’ needs to meet business goals, while maintaining enterprise-grade security. Likewise, SIFT Analytics Group recognizes the importance of bringing in solutions that address new business needs, to keep your work fast, clear, and sharp.

If you would like to learn more about the Alteryx platform, contact the SIFT Analytics Group team. At SIFT Analytics Group, we do not just distribute data analytics software; our services also extend to advisory work that helps lead your business to success through accurate data analysis and sound decision-making.


In today’s logistics industry, data analytics is becoming the key to optimizing processes and improving supply chain performance.

Applying data analytics to logistics: easy control of goods and vehicle dispatch.

1. Demand Forecasting

Data analytics helps forecast market demand accurately, allowing logistics businesses to plan transportation and manage inventory effectively.

2. Transport Route Optimization

By using historical shipping data and information from multiple sources, analytics applications help optimize routes, reducing transportation costs and delivery times.

3. Smart Inventory Management

Data analytics provides detailed information on stock levels and the flow of goods. This helps logistics businesses manage inventory intelligently and reduce unnecessary stock.

4. Responding to Risk

Data analytics helps assess risks across the supply chain, from production to transportation. This creates the flexibility to respond to incidents and minimize negative impacts.

5. Improving Port and Warehouse Capacity Efficiency

By tracking and analyzing data on port and warehouse operations, businesses can improve capacity performance and reduce loading and unloading times.

6. Improving Customer Service

Shipping data and order history help improve customer service by tracking and continuously providing information on the location and status of goods.

7. Strengthening Security

Data analytics provides information to monitor and prevent security issues in logistics processes, keeping the system safe and reliable.
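To give a feel for the route optimization point (item 2 above), here is a minimal sketch of a nearest-neighbour heuristic: from the depot, always drive to the closest stop not yet visited. The coordinates are made up and straight-line distance stands in for real road distance. This is a simple greedy rule, not an optimal solver and not a description of any specific product.

```python
import math

def nearest_neighbor_route(depot, stops):
    """Greedy heuristic: always drive to the closest unvisited stop."""
    route = [depot]
    remaining = list(stops)
    current = depot
    while remaining:
        closest = min(remaining, key=lambda stop: math.dist(current, stop))
        remaining.remove(closest)
        route.append(closest)
        current = closest
    return route

def route_length(route):
    """Total straight-line distance along the route."""
    return sum(math.dist(a, b) for a, b in zip(route, route[1:]))

# Hypothetical coordinates for a depot and three delivery stops.
depot = (0, 0)
stops = [(5, 0), (1, 0), (3, 0)]

route = nearest_neighbor_route(depot, stops)
print(route, route_length(route))
print(route_length([depot] + stops))  # visiting stops in the order received
```

Even on this toy data, the reordered route is less than half the length of visiting the stops in the order they were received.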

Applying data analytics to logistics: optimizing transport routes.

With the increasingly powerful data analytics applications that SIFT Analytics Group provides, logistics businesses can use this valuable information to drive innovation, reduce costs, and optimize every aspect of the supply chain. This not only helps businesses modernize their transport operations but also reshapes industry standards for the future.

In addition, SIFT Analytics Group provides staff for individual data analytics projects, as well as trainers for individual departments. With more than 25 years in data analytics and a presence in 4 countries, we are confident that we will bring your business the greatest satisfaction.

Connect with SIFT Analytics

As businesses look for digital transformation solutions to integrate quickly into the digital era, SIFT stands ready to advise on and deliver transformative solutions based on the most relevant real-world data. Helping businesses access and better understand their own data is SIFT’s pioneering work. Contact us for a free consultation, or visit www.sift-ag.com for more information.

About SIFT Analytics

Look ahead to the future of your business with SIFT Analytics, using powerful, state-of-the-art data analytics solutions that are in use across developed countries. With a comprehensive, AI-supported solution framework, we strive to provide clear, immediate information that helps leaders set policies that closely match the realities of their business.

Headquartered in Singapore since 1999, with over 500 corporate clients in the region, SIFT Analytics is your trusted partner in delivering enterprise data analytics solutions. We pair these with the most advanced technology tools throughout your business analytics journey. With our team’s more than 25 years of experience, you will receive a detailed demo of the case studies that most closely match your business’s situation.

Published by SIFT Analytics

SIFT Marketing Team

marketing@sift-ag.com

+8428 7304 0788

SIFT Analytics Group


In modern manufacturing, applying data analytics is not just a strategy but a decisive factor that helps businesses improve performance and stay competitive. For manufacturing, data analytics is not only a tool but also a driving force for transformation.

1. Making Smart Decisions

Data analytics helps businesses understand the rules and patterns in the production chain. From there, managers can make smart decisions about production schedules, inventory management, and workflow optimization.

2. Optimizing Machine Performance

By monitoring and analyzing data from production equipment and machinery, businesses can ensure they are running at optimal performance. This helps minimize downtime, increase productivity, and reduce maintenance costs.

3. Forecasting Market Demand

Data analytics not only helps monitor the production process but also supports market demand forecasting. Accurate predictions of consumer trends help businesses adjust production and inventory flexibly.

Data analytics in manufacturing: easily monitor operating processes.

4. Enhancing Product Quality

By tracking quality metrics and customer feedback, data analytics helps identify the root causes of product quality problems. This enables continuous improvement and strengthens brand image.

5. Saving Energy and Resources

Data analytics helps businesses not only optimize energy use but also reduce waste and unnecessary resource consumption. This both meets environmental protection requirements and lowers production costs.
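As one small illustration of the machine performance point (item 2 above), the sketch below computes availability, the share of planned production time a machine was actually running, and flags machines that fall below a target. The machine names, shift figures, and the 85% threshold are all assumptions for the example.

```python
def availability(planned_minutes, downtime_minutes):
    """Share of planned production time the machine was actually running."""
    if planned_minutes <= 0:
        raise ValueError("planned_minutes must be positive")
    return (planned_minutes - downtime_minutes) / planned_minutes

# Hypothetical shift logs: machine -> (planned minutes, downtime minutes)
shift_logs = {
    "press_1": (480, 24),
    "press_2": (480, 96),
    "lathe_1": (480, 48),
}

THRESHOLD = 0.85  # assumed availability target

scores = {
    machine: availability(planned, downtime)
    for machine, (planned, downtime) in shift_logs.items()
}

# Machines below the target are candidates for maintenance review.
needs_attention = sorted(m for m, score in scores.items() if score < THRESHOLD)
print(scores, needs_attention)
```

Tracked shift after shift, a falling availability score is an early signal that a machine needs maintenance before it fails outright.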

Data analytics in manufacturing: adjust policies promptly based on actual conditions.

As manufacturing becomes increasingly competitive, applying data analytics is not just an advantage but a deciding factor in success. For businesses that want to innovate and adapt, data analytics is the key that opens the door to maximum performance and competitiveness.

SIFT Analytics is confident it can deliver analytics solutions built on the data your business already has. Managers can now read and understand the numbers more easily, so they can address the problems the business is facing based on the most relevant real-world data.

Contact us soon to share your goals and receive dedicated advice backed by our 25 years of experience.

Kết nối cùng SIFT Analytics

Khi các Doanh nghiệp đang tìm kiếm các giải pháp Chuyển đổi số để hoá nhập nhanh chóng vào kỷ nguyên số, SIFT vẫn luôn sẵn sàng để tư vấn và cung cấp các giải pháp mang tính chuyển đổi cho Doanh nghiệp dựa trên những dữ liệu thực tế nhất. Giúp Doanh nghiệp dễ dàng tiếp cận và hiểu rõ hơn về dữ liệu của mình chính là công việc tiên phong của SIFT, hãy liên hệ với chúng tôi để được tư vấn miễn phí. Hãy truy cập trang web www.sift-ag.com để biết thêm thông tin.

Thông tin về SIFT Analytics

Dự đoán về tương lai của doanh nghiệp với SIFT Analytics bằng các giải pháp phân tích dữ liệu mạnh mẽ và hiện đại bậc nhất đang được các quốc gia phát triển sử dụng. Với khung giải pháp toàn diện được hỗ trợ bởi AI, chúng tôi cố gắng cung cấp những thông tin rõ ràng, tức thời và có thể giúp nhà lãnh đạo đưa ra những chính sách sát với thực tế của doanh nghiệp nhất.

Headquartered in Singapore since 1999, with more than 500 enterprise customers in the region, SIFT Analytics is your trusted partner for enterprise data analytics solutions. We also provide the most advanced technology tools throughout your business analytics journey. With our team's more than 25 years of experience, you will receive a detailed demo with case studies closely matched to your business's situation.

Published by SIFT Analytics

SIFT Marketing Team

marketing@sift-ag.com

+8428 7304 0788

SIFT Analytics Group

Explore our latest insights

In today's digital age, digital transformation is reshaping how we manage and use financial services. For the finance industry, it is not just a trend but a necessity for staying competitive.

Digital transformation in finance brings the convenience of managing accounts, making payments, and investing anytime, anywhere. Mobile apps and financial websites not only reduce working hours but also create a personalized experience for each customer.

Importantly, digital transformation is not only about technology but also about cultural change. Banks and financial companies are embracing flexibility and innovation to respond quickly to market volatility and customer expectations.

Digital Transformation in the Finance Industry

By adopting digital transformation, the finance industry not only optimizes internal processes but also creates a flexible, creative working environment. This improves performance and helps the industry respond quickly to the challenges of a changing business environment.

In short, digital transformation in finance not only delivers technological benefits but also changes how we interact with financial services. Innovation and flexibility are the keys to a diverse and promising digital financial future.

To see digital transformation models, and especially data analytics tools for the finance industry, contact SIFT Analytics so our experts can give you a more detailed demo.


Modern customer care is regarded as a pillar of business success. In the digital era, building close relationships with customers requires both strategy and flexibility. Below are some customer care strategies that can help businesses increase engagement and maintain customer satisfaction.

1. Understand Your Customers Better with Freshworks:

The first step is to understand your customers. Analyze data to capture their trends, needs, and wants. This helps you personalize service and create an optimal experience.

2. Freshworks Provides 24/7 Service:

The modern world demands flexible service hours. A customer care system that operates 24/7 across online channels helps resolve issues and respond to requests immediately.

3. Use Freshchat and Freddy AI to Support Customers:

Chatbots and Freddy AI are powerful tools that help automate support processes and deliver information quickly. This reduces wait times and improves the customer experience.

4. Build an Online Community:

Building an online community lets customers share experiences and opinions and help one another. This not only creates a space for interaction but also strengthens customer loyalty.

5. Continuous Feedback:

Pay attention to customer feedback. Use rating and survey tools to measure satisfaction, and keep improving your service based on that feedback.

6. Omnichannel Support with Freshdesk:

Integrate communication channels such as email, phone, web, and social media so customers have more ways to connect. This adds convenience and improves accessibility.

7. Resolve Issues Effectively with Freshsales:

A fast, effective issue-resolution system builds customer trust. Good customer care is not only about resolving issues but also about how we resolve them.

8. Create Educational Content with Fresh Service:

Provide educational information that helps customers better understand your products and services. Blogs, video tutorials, and support documentation are all great ways to share knowledge.

Customer care is not just about generating transactions; it is about building long-term relationships. By implementing these strategies, businesses can build a loyal community and grow sustainably in today's market.
