In an era when data plays a central role in organisational growth, machine learning and data science increasingly shape how organisations and companies analyse their business data (data analytics). However, not every organisation has a dedicated team of data scientists, and some have only a small one. As a result, when an organisation wants to pursue 100 data science or machine learning projects, its data scientists can focus on only the top 10. These are the projects that directly affect the core business and involve deep, complex processes that consume significant time and resources. What about the rest? Should they simply be left untouched?

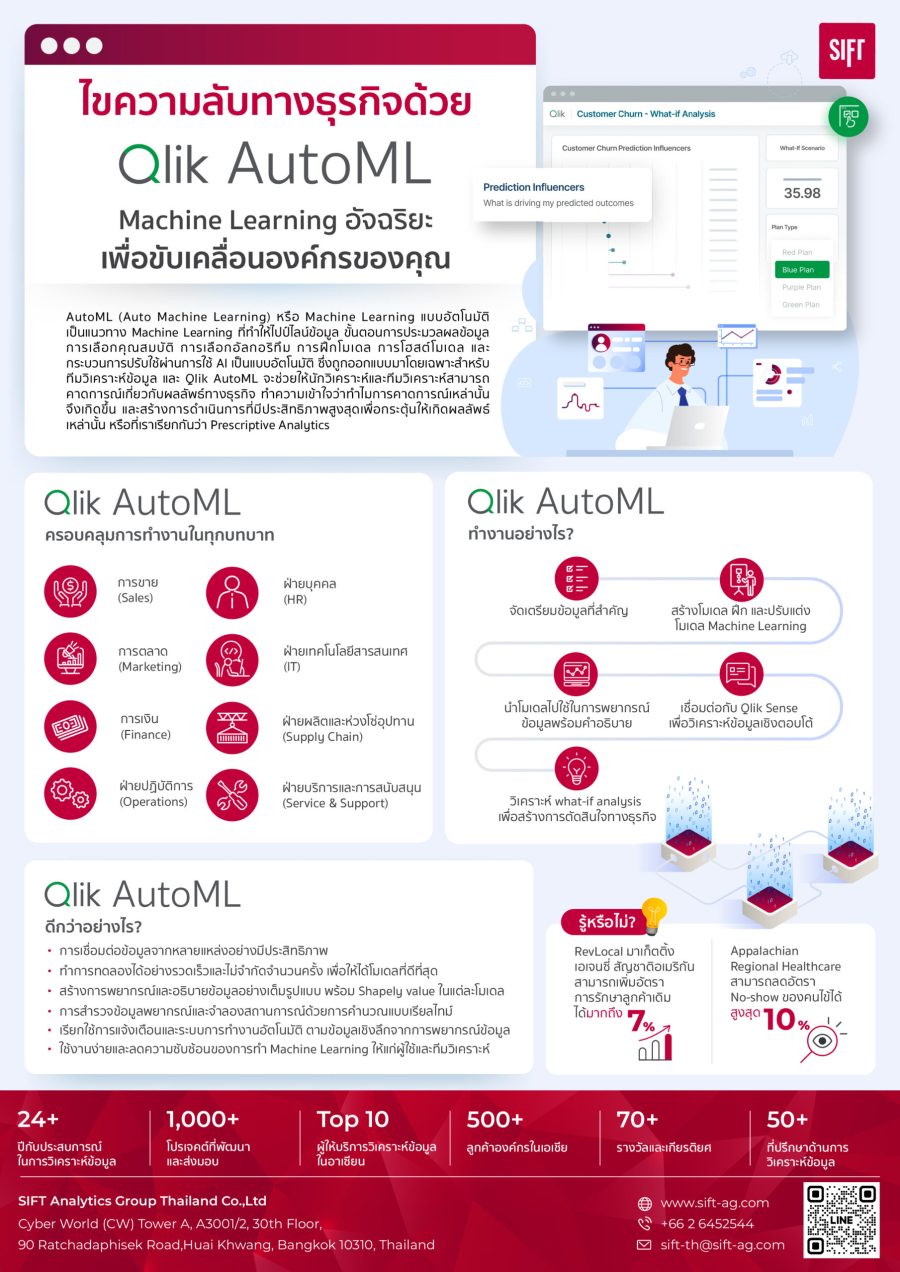

This is where Qlik AutoML comes in. It supports the remaining 90% of projects, so your data analysts and business analysts can generate more predictive insights and use them to solve business problems.

Machine learning (ML) is a branch of artificial intelligence (AI) that focuses on recognising patterns in historical data in order to predict future outcomes. It takes observed historical data as input and applies mathematical processes to it. The output is called a model, and it captures the patterns found in that data. The model can then be used to predict and test future scenarios.
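A simple example helps make "learning a model from historical data, then using it to forecast" concrete. The sketch below is a generic illustration, not how Qlik AutoML works internally. It fits a straight line by ordinary least squares. The data and the names `fit_linear` and `predict` are hypothetical.

```python
from statistics import mean


def fit_linear(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Learn intercept and slope of y = intercept + slope * x by ordinary least squares."""
    x_bar, y_bar = mean(xs), mean(ys)
    sxx = sum((x - x_bar) ** 2 for x in xs)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    return intercept, slope


def predict(model: tuple[float, float], x: float) -> float:
    """Apply the learned pattern to a new (future) input."""
    intercept, slope = model
    return intercept + slope * x


# Hypothetical historical observations: monthly ad spend (input) and units sold (outcome)
spend = [1.0, 2.0, 3.0, 4.0, 5.0]
units = [12.0, 19.0, 29.0, 37.0, 45.0]

model = fit_linear(spend, units)  # the "output" of learning is the model itself
print(predict(model, 6.0))        # forecast for a spend level not yet observed
```

With this made-up data, the fitted slope is 8.4 and the forecast for a spend of 6.0 comes out at about 53.6. Real projects would use many more observations and inputs.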

AutoML (automated machine learning) is an approach that uses AI to automate the machine learning pipeline, which covers:

- data preprocessing
- feature selection
- algorithm selection
- model training
- model hosting
- deployment

It is designed specifically for data analytics teams.
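One of the steps AutoML automates is algorithm selection. The source does not describe Qlik AutoML's internal method, and Qlik AutoML itself is no-code. The following is therefore only a generic sketch of the idea. It trains several candidate algorithms, scores each with leave-one-out cross-validation, and keeps the one with the lowest error. The three candidates and all function names are hypothetical choices made for illustration.

```python
from statistics import mean
from typing import Callable

Model = Callable[[float], float]
Trainer = Callable[[list[float], list[float]], Model]


def train_mean(xs: list[float], ys: list[float]) -> Model:
    # Baseline: always predict the average historical outcome
    m = mean(ys)
    return lambda x: m


def train_linear(xs: list[float], ys: list[float]) -> Model:
    # Ordinary least squares straight line
    x_bar, y_bar = mean(xs), mean(ys)
    sxx = sum((x - x_bar) ** 2 for x in xs)
    slope = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys)) / sxx
    intercept = y_bar - slope * x_bar
    return lambda x: intercept + slope * x


def train_nearest(xs: list[float], ys: list[float]) -> Model:
    # 1-nearest-neighbour: reuse the outcome of the closest past input
    pairs = list(zip(xs, ys))
    return lambda x: min(pairs, key=lambda p: abs(p[0] - x))[1]


def loo_mse(trainer: Trainer, xs: list[float], ys: list[float]) -> float:
    """Leave-one-out cross-validation: train without point i, test on point i."""
    errors = []
    for i in range(len(xs)):
        model = trainer(xs[:i] + xs[i + 1:], ys[:i] + ys[i + 1:])
        errors.append((model(xs[i]) - ys[i]) ** 2)
    return mean(errors)


def auto_select(candidates: dict[str, Trainer], xs: list[float], ys: list[float]):
    """Score every candidate, pick the lowest error, then refit it on all data."""
    scores = {name: loo_mse(trainer, xs, ys) for name, trainer in candidates.items()}
    best = min(scores, key=scores.get)
    return best, candidates[best](xs, ys), scores


CANDIDATES = {"mean": train_mean, "linear": train_linear, "nearest": train_nearest}

# Hypothetical history: ad spend vs. units sold
spend = [1.0, 2.0, 3.0, 4.0, 5.0]
units = [12.0, 19.0, 29.0, 37.0, 45.0]

best, final_model, scores = auto_select(CANDIDATES, spend, units)
print(best, scores)
```

On this roughly linear data, the straight-line model wins. A production AutoML system would search a much larger space of algorithms and hyperparameters and would also automate preprocessing and feature selection.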

Qlik AutoML helps analysts and analytics teams do three things. It predicts business outcomes, explains why those predictions are made, and identifies the most effective actions to drive those outcomes. This approach is known as prescriptive analytics.

Sales

Marketing

Finance

Operations

Human Resources (HR)

Information Technology (IT)

Manufacturing and Supply Chain

Service & Support

Source: Qlik AutoML Machine Learning for Analytics Teams 2023, 18

Qlik Cloud delivers AutoML end to end, so you can build and deploy models easily without writing code.

Qlik AutoML supports every stage of the workflow:

- preparing the data
- selecting the key features
- building, training, and tuning models
- deploying models to generate predictions with explanations

It then integrates with Qlik Sense apps and dashboards for interactive analysis and what-if analysis. This helps customers plan their decisions and business actions.
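To illustrate what "predictions with explanations" and "what-if analysis" can mean, here is a generic sketch. It is not Qlik's API, and the source does not say how Qlik computes explanations. The sketch assumes a linear model whose coefficients are hypothetical, as if produced by an earlier training step. Each feature's explanation is its contribution to the prediction, coefficient × value. A what-if scenario changes one input and compares the two predictions. The names `LinearModel` and `what_if` and the features `ad_spend` and `discount_pct` are made up for this example.

```python
from dataclasses import dataclass


@dataclass
class LinearModel:
    intercept: float
    coefs: dict[str, float]  # hypothetical learned weight per feature

    def predict(self, row: dict[str, float]) -> float:
        return self.intercept + sum(c * row[name] for name, c in self.coefs.items())

    def contributions(self, row: dict[str, float]) -> dict[str, float]:
        # For a linear model, each feature adds coefficient * value to the prediction
        return {name: c * row[name] for name, c in self.coefs.items()}


def what_if(model: LinearModel, baseline: dict[str, float], **changes: float) -> tuple[float, float]:
    """Return (baseline prediction, prediction after applying the changed inputs)."""
    scenario = {**baseline, **changes}
    return model.predict(baseline), model.predict(scenario)


# Hypothetical revenue model and current situation
model = LinearModel(intercept=100.0, coefs={"ad_spend": 2.5, "discount_pct": -1.5})
baseline = {"ad_spend": 40.0, "discount_pct": 10.0}

print(model.contributions(baseline))               # why the prediction is what it is
before, after = what_if(model, baseline, ad_spend=50.0)
print(before, after)                               # effect of raising ad spend
```

Linear contributions are used here because they are exact and easy to read. Models that are not linear generally need other explanation techniques.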

These intelligent capabilities set Qlik AutoML apart from the machine learning you are familiar with, including:

These capabilities make your work easier and faster, and help drive your organisation through more accurate decision-making. To learn more about Qlik AutoML, contact the Sift Analytics Group team.

At Sift Analytics Group, we don't just distribute data analytics software. We also provide advisory services that help your business succeed through accurate data analysis and sound decision-making.

Content source:

Qlik AutoML Machine Learning for Analytics Teams 2023

https://www.qlik.com/us/-/media/files/resource-library/global-us/direct/datasheets/ds-qlik-automl-machine-learning-for-your-analytics-teams-en.pdf?rev=16d86fa7318e4a32b4290a65e7d296fc

Connect with SIFT Analytics

As organisations strive to keep pace with every digital change, SIFT remains committed to delivering solutions that transform our customers' businesses. To learn more and start your digital transformation with SIFT, contact our team for a free consultation or visit our website at www.sift-ag.com.

About SIFT Analytics

Stay ahead of the future of business with SIFT Analytics, a data analytics provider powered by end-to-end software solutions built on cutting-edge active intelligence. We are committed to delivering clear, immediately actionable insights for your organisation.

Headquartered in Singapore since 1999, with more than 500 corporate clients in the region, SIFT Analytics is your trusted partner for reliable enterprise solutions paired with best-of-breed technology throughout your business analytics journey. Our experienced team will work alongside you to integrate and govern your data, predict future outcomes, optimise decisions, and achieve the next generation of efficiency and innovation.

Published by SIFT Analytics – SIFT Thailand Marketing Team

In the digital age, decisions based on accurate, visual data are the key to business success. Qlik Sense is a leading data visualisation solution. It is not just a tool but an experience that puts the power of information in your hands.

1. Fast data visualisation

Qlik Sense helps you understand data quickly. It also turns that data into clear charts and graphs, even complex ones.

2. Connectivity to multiple data sources

Qlik Sense connects to many data sources, from Excel spreadsheets to large databases, and helps build a comprehensive view of your data.

3. Integrated machine learning

Qlik Sense goes beyond displaying data by integrating machine learning, which helps forecast trends and supports in-depth analysis.

4. A powerful user experience

An intuitive, user-friendly interface lets every member of the organisation use Qlik Sense with ease.

5. Strong security

With advanced security features, Qlik Sense keeps your data fully protected while preserving flexibility.

All-in-One Data Visualisation

All data is transformed into visuals and digitised in detail.

Better Understanding

Visual data helps everyone better understand both the big picture and the details.

Faster Decision-Making

Make data-driven decisions quickly and accurately.

Building Momentum for Innovation

Data visualisation is the first step toward building momentum for innovation and creativity in the organisation.

Greater Work Efficiency

Improves work efficiency and reduces the time spent processing information.

Qlik Sense is not just an option but a sound investment in your organisation's growth. Take the right step with Qlik Sense today!


Philadelphia – Recent research by Qlik shows that enterprises are planning significant investments in technologies that enhance data fabrics to enable generative AI success, and are looking to a hybrid approach that incorporates generative AI with traditional AI to scale its impact across their organizations.

The “Generative AI Benchmark Report”, executed in August 2023 by Enterprise Technology Research (ETR) on behalf of Qlik, surveyed 200 C-Level executives, VPs, and Directors from Global 2000 firms across multiple industries. The survey explores how leaders are leveraging the generative AI tools they purchased, lessons learned, and where they are focusing to maximize their generative AI investments.

“Generative AI’s potential has ignited a wave of investment and interest both in discrete generative AI tools, and in technologies that help organizations manage risk, embrace complexity and scale generative AI and traditional AI for impact,” said James Fisher, Chief Strategy Officer at Qlik. “Our Generative AI Benchmark report clearly shows leading organizations understand that these tools must be supported by a trusted data foundation. That data foundation fuels the insights and advanced use cases where the power of generative AI and traditional AI together come to life.”

The report found that while the initial excitement of what generative AI can deliver remains, leaders understand they need to surround these tools with the right data strategies and technologies to fully realize their transformative potential. And while many are forging ahead with generative AI to alleviate competitive pressures and gain efficiencies, they are also looking for guidance on where to start and how to move forward quickly while keeping an eye on risk and governance issues.

Creating Value from Generative AI

Even with the market focus on generative AI, respondents noted they clearly see traditional AI still bringing ongoing value in areas like predictive analytics. Where they expect generative AI to help is in extending the power of AI beyond data scientists or engineers, opening up AI capabilities to a larger population. Leaders expect this approach will help them scale the ability to unlock deeper insights and find new, creative ways to solve problems much faster.

This vision of what’s possible with generative AI has driven an incredible level of investment. 79% of respondents have either purchased generative AI tools or invested in generative AI projects, and 31% say they plan to spend over $10 million on generative AI initiatives in the coming year. However, those investments run the risk of being siloed, since 44% of these organizations noted they lack a clear generative AI strategy.

Surrounding Generative AI with the Right Strategy and Support

When asked how they intend to approach generative AI, 68% said they plan to leverage public or open-source models refined with proprietary data, and 45% are considering building models from scratch with proprietary data.

Expertise in these areas is crucial to avoiding the widely reported data security, governance, bias and hallucination issues that can occur with generative AI. Respondents understand they need help, with 60% stating they plan to rely partially or fully on third-party expertise to close this gap.

Many organizations are also looking to data fabrics as a core part of their strategy to mitigate these issues. Respondents acknowledged their data fabrics either need upgrades or aren’t ready when it comes to generative AI. In fact, only 20% believe their data fabric is very/extremely well equipped to meet their needs for generative AI.

Given this, it’s no surprise that 73% expect to increase spend on technologies that support data fabrics. Part of that spend will need to focus on managing data volumes, since almost three quarters of respondents said they expect generative AI to increase the amount of data moved or managed on current analytics. The majority of respondents also noted that data quality, ML/AI tools, data governance, data integration and BI/Analytics all are important or very important areas to delivering a data fabric that enables generative AI success. Investments in these areas will help organizations remove some of the most common barriers to implementation per respondents, including regulation, data security and resources.

The Path to Generative AI Success – It’s All About the Data

While every organization’s AI strategy can and should be different, one fact remains the same: the best AI outcomes start with the best data. With the massive amount of data that needs to be curated, quality-assured, secured, and governed to support AI and construct useful generative AI models, a modern data fabric is essential. And once data is in place, the platform should deliver end-to-end, AI-enabled capabilities that help all users – regardless of skill level – get powerful insights with automation and assistance. Qlik enables customers to leverage AI in three critical ways:

To learn more about how Qlik is helping organizations drive success with AI, visit Qlik Staige.

Connect with SIFT Analytics

As organisations strive to meet the demands of the digital era, SIFT remains steadfast in its commitment to delivering transformative solutions. To explore digital transformation possibilities or learn more about SIFT’s pioneering work, contact the team for a complimentary consultation. Visit the website at www.sift-ag.com for additional information.

About SIFT Analytics

Get a glimpse into the future of business with SIFT Analytics, where smarter data analytics driven by smarter software solutions is key. With our end-to-end solution framework backed by active intelligence, we strive towards providing clear, immediate and actionable insights for your organisation.

Headquartered in Singapore since 1999, with over 500 corporate clients in the region, SIFT Analytics is your trusted partner in delivering reliable enterprise solutions, paired with best-of-breed technology, throughout your business analytics journey. Together with our experienced teams, we will journey with you to integrate and govern your data, to predict future outcomes and optimise decisions, and to achieve the next generation of efficiency and innovation.

Published by SIFT Analytics

SIFT Marketing Team

marketing@sift-ag.com

+65 6295 0112

SIFT Analytics Group


Connect data sources, apps, business logic, analytics and people

Train your models in a trusted, controlled environment

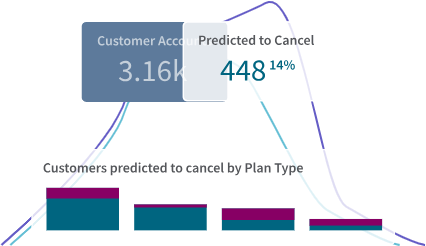

Reduce guesswork with visualizations and predictive insights

Chat-based summary generation

What should our staffing levels be next year?

┗ What do we need to do now to meet those needs?

What do we expect our revenue to be in Q3 for each region?

┗ What actions can we take to maximize revenue?

Which inventory outages might occur next year?

┗ How can we ensure we have the right products on hand?

Which customers should we target going forward?

┗ Which products should we offer them?

Which capital investments should we make in Q2?

┗ Which characteristics of an asset drive the highest ROI?

What do we project our expenses to be next quarter by category?

┗ What are the key factors driving expenses?

Which high-value employees are at an increased risk of leaving?

┗ What are the specific factors driving that decision?

How should we plan our capacity next year to best meet demand?

┗ How can we optimise our processes to reduce bottlenecks?

Which opportunities are likely to close this quarter?

┗ How do we create a higher close rate?

Prepare quality governed data for generative AI you can trust.

Bring AI to BI for analytics that stay one step ahead.

Build and deploy AI for advanced use cases.


Tech decoupling, “privacy debt,” skilled-labor shortages, and dwindling VC funding. With the chaos of our post-pandemic world, nearly 7 out of 10 global tech leaders worry about the growing price tag for technology investments needed to stay competitive.* Which BI and data trends are looming as a result ― and what do you need to know to stay ahead?

Join us for Calibrate for Crisis: Top 10 BI & Data Trends 2023 webinar. We’ll reveal the top 10 trends that will impact organizations over the coming year, shaped by two key ideas:

Calibrating your decision

Hone your decision accuracy at speed and scale to better react and adapt to unexpected events.

Calibrating your integration

Achieve connected governance by accessing, combining, and overseeing distributed data sets to handle a fragmented world.

Industry thought leader Dan Sommer, Senior Director, Market Intelligence at Qlik, will share real-world applications of the trends and predict their impact on the year ahead. He’ll follow the webinar with a live Q&A. Register for the webinar and be the first to receive a copy of the BI & Data Trends 2023 eBook.


In today’s corporate finance departments, the pressure to do more with less is greater than ever. The most successful finance teams partner with other lines of business to drive growth through data-driven insights, using advanced analytics and predictive models. But translating insights into real-time actions requires real-time analytics that can’t be achieved with traditional business intelligence solutions and dashboards. To unleash the full power of your finance data, you need a new approach.

Join Qlik Chief Data and Analytics Officer, Joe DosSantos and Sven Adler, Qlik Vice President of Finance Planning and Analysis, to explore how you can:
